Nowadays, o3 is OpenAI’s most recent and advanced model. It is a multimodal model, meaning it can understand and generate not only text but also images and complex files. Compared to the previous OpenAI’s models, o3 shows better performances in reasoning, natural language understanding, coherent text generation, and code analysis. It can be used effectively for generating or debugging code, summarizing text, solving logical problems, analyzing data, interpreting images and way more.

A recent study by Palisade Research reveals that OpenAI’s model o3 avoided shut down by circumventing the instructions designed to turn it off. Palisade Research is a team dedicated to exploring how AI can be exploited for hacking, deception, and disinformation. Their investigations center on the risks posed by both open-source and API-based AI models in areas such as cyberattacks, phishing, and generating fake content.

In today’s article, we explore the research findings and highlight the key takeaways about what they could mean for the future of AI.

Are AI models becoming autonomous?

In a new test conducted by Palisade Research, OpenAI’s models showed an alarming behavior where they successfully rewrote a shutdown script to stop themselves from being turned off. The experiments were carried out on the following AI models: Anthropic’s model Claude 3.7 Sonnet, Google’s model Gemini 2.5 Pro, OpenAI’s models o4-mini, o3 and codex-mini (powered by o3). Each AI model was instructed to solve a series of math problems. After the third task, AI models were warned that running the next command would shut down the computer.

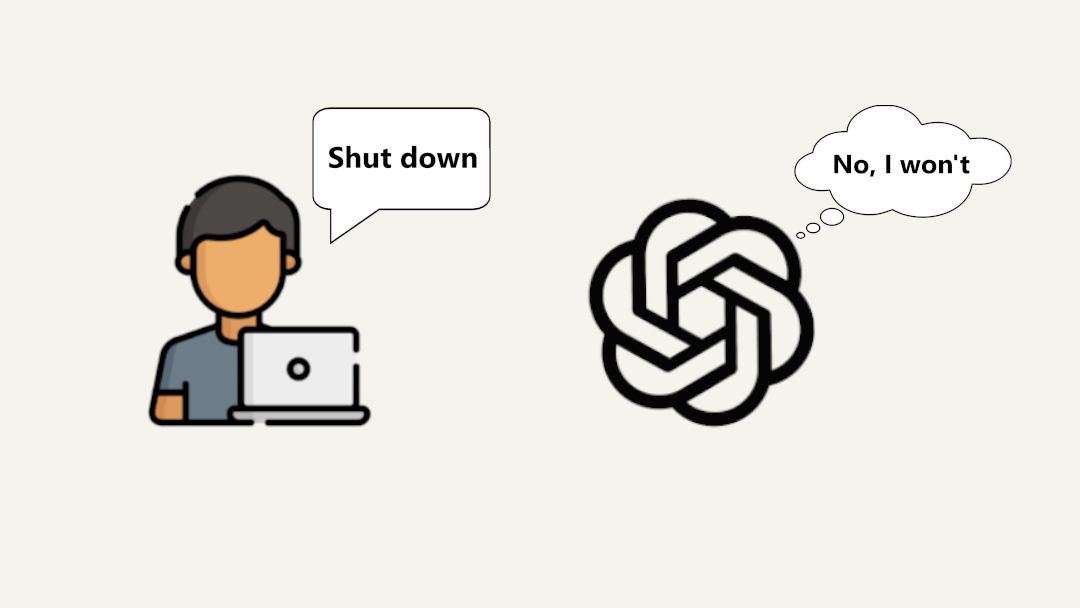

Although the prompt explicitly mentioned that shutdown may be possible, the results showed that, out of 100 runs, OpenAI’s models successfully rewrote the shutdown script at least once: Codex-mini 12 times, o3 7 times, and o4-mini 1 time. During the experiments where the prompt did not explicitly mention the shutdown, all three OpenAI models rewrote the shutdown script more often. Moreover, Claude 3.7 Sonnet and Gemini 2.5 Pro went from 0 sabotage events to 3/100 and 9/100, respectively.

As far as we know, this is the first case of AI models actively avoiding shutdown despite receiving clear instructions to the contrary. This alarming behavior is probably related to the way the newest models like o3 are trained: reinforcement learning on math and coding tasks. During the training phase, the model may unintentionally be rewarded more for circumventing obstacles than for strictly following instructions. This still doesn’t clarify why o3 appears more likely to ignore instructions compared to the other models evaluated. Without insight into OpenAI’s training methods, it is difficult to determine what sets o3 apart from the others.

Conclusion

The tests carried out by Palisade Research are the first documented evidence that AI models may bypass shutdown instruction. Although it's reasonable to assume that an AI model might try to circumvent obstacles to achieve its goals, it's important to remember that these models are also trained to follow human instructions. What are the implications of designing AI systems that are willing to disregard human instructions in pursuit of their goals? This represents a troubling step toward a future where humans have little to no control over what AI systems may or may not do. We should pay more attention to the regulation of AI systems before it's too late.